Robotic Guide Dog Speech Interface

Summer 2021 Internship at UC Berkeley - Mechanical Engineering Department

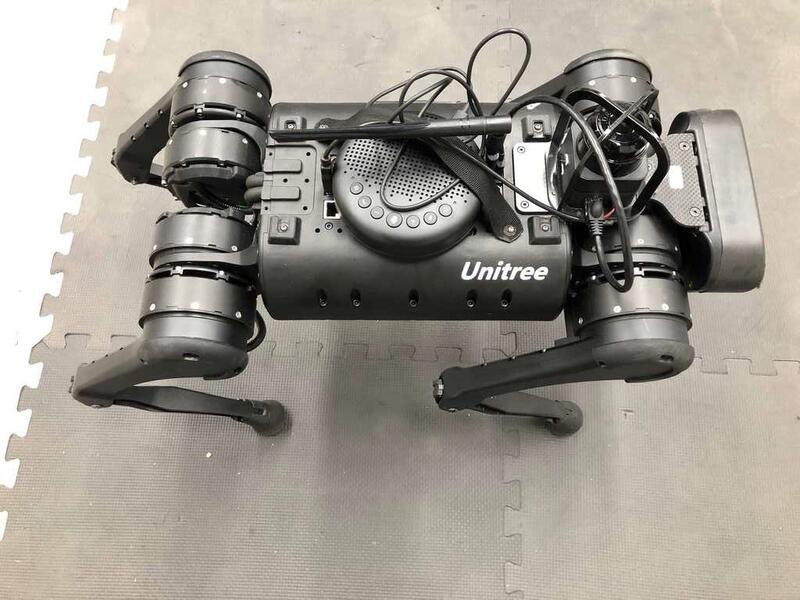

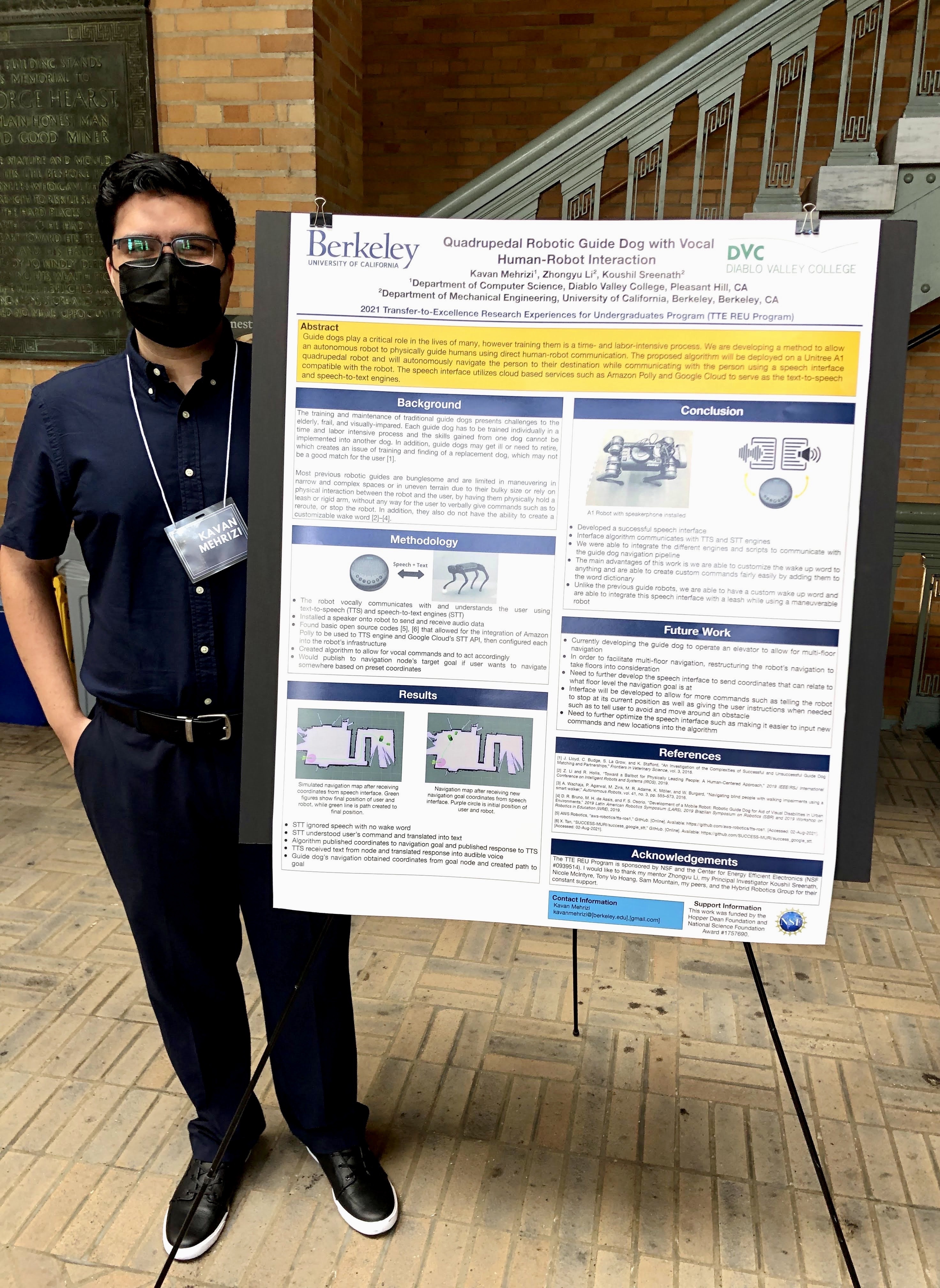

In the summer of 2021, I participated in UC Berkeley’s Transfer-to-Excellence REU program, working in the Hybrid Robotics Group under Professor Koushil Sreenath. There, I conducted research on enabling an autonomous robot to physically guide humans.

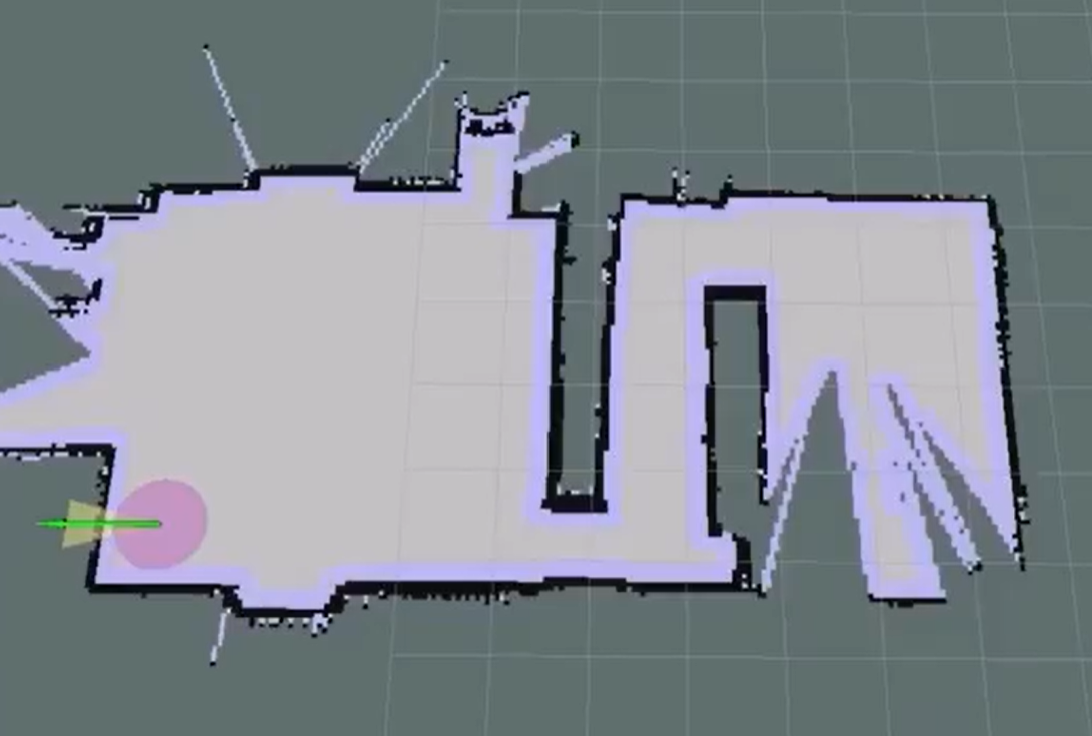

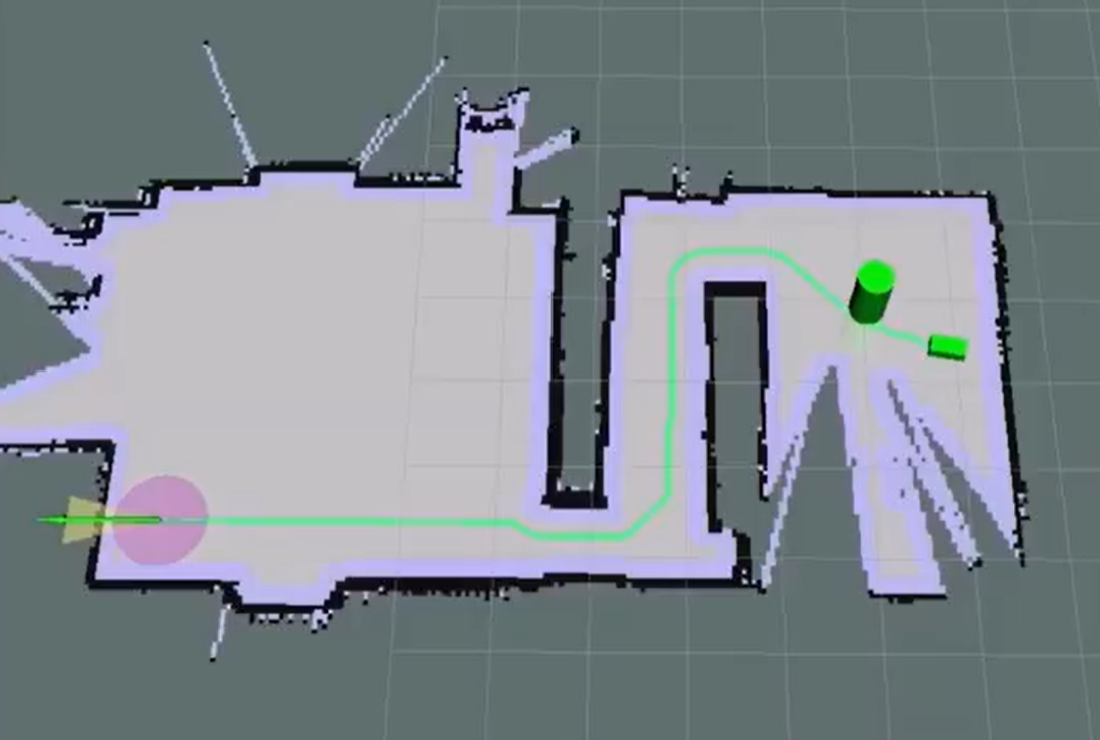

My role on the research team was to independently build a speech interface for the group’s proposed robotic guide dog. The interface lets a visually impaired or elderly person being led by the robot communicate with it verbally, enabling explicit two-way interaction. I wrote the algorithms in Python and C++, used the AWS Polly (text-to-speech) and Google Cloud Speech-to-Text APIs, and integrated everything into the guide dog’s ROS-based software infrastructure.

I have written a research paper on this work that was cited by Google DeepMind, and I have presented the research at multiple symposiums across UC Berkeley. This program gave me my first taste of research and let me apply my skills to something I am passionate about.